When we talk about asynchronous programming, the first thing we must do is understand a series of terms and “buzzwords” like asynchrony, concurrency, and parallelism.

I already said that the world of asynchrony is complicated, and I’m not going to lie to you: the topic could easily be its own course, which, logically, is not the purpose of this article.

But, as a programmer, there are certain terms that should sound familiar to you. And I can already tell you that these are very related words, and we could even say that they are a bit mixed up and tangled with each other.

Don’t worry, I don’t plan to be too tedious about the differences between them. In the end, they are just words. What matters is knowing the concepts and what each of them is, even if only briefly.

So let’s start with the most basic, the synchronous process 👇

Synchronous and Blocking Process

A synchronous and blocking process is one that executes sequentially, waiting for each operation to finish before starting the next.

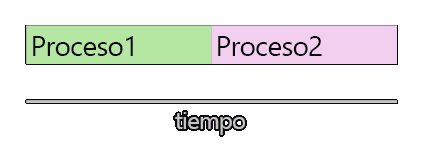

That is, if your program had two tasks, “process 1” and “process 2”, process 2 would wait for process 1 to finish and would start immediately after its completion.

In this case, the processes are synchronous because they start one after another. Furthermore, process 1 is blocking because process 2 cannot start until process 1 finishes execution.

This is the first type of process you will learn. It is the simplest to understand and program, the classic way of doing things. Here, there is no asynchrony, no concurrency, nothing at all.
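As a minimal sketch of this behavior, the following Python snippet runs two tasks one after the other. The `process` function and its `time.sleep` call are hypothetical stand-ins for real work, not anything from a specific library.

```python
import time

def process(name, duration, log):
    """Hypothetical task: records its start and end, blocking in between."""
    log.append(f"{name} start")
    time.sleep(duration)  # stands in for real work; the caller is blocked here
    log.append(f"{name} end")

def run_sequentially():
    log = []
    process("process 1", 0.05, log)  # process 2 cannot start until this returns
    process("process 2", 0.05, log)
    return log

if __name__ == "__main__":
    for event in run_sequentially():
        print(event)
```

The log always shows process 1 starting and ending before process 2 starts, which is exactly what “synchronous and blocking” means here.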

Concurrency

Concurrency refers to a system’s ability to execute multiple tasks that overlap in time. That is, they occur concurrently (hence the name).

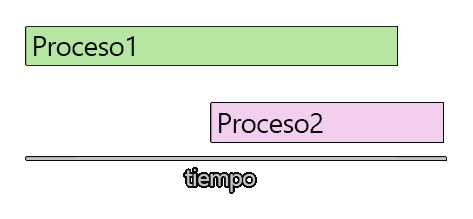

By definition, two tasks are concurrent if one of them starts between the start and end of the other.

By saying that two tasks are concurrent, we haven’t said anything about “how” that concurrency is going to be, nor how they will achieve it, or anything at all.

We are simply saying that for a time, both are executing. That is, we have literally just said they overlap temporally.
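The definition above can be written down as a small check on time intervals. This is only an illustrative sketch: the `Task` class and `are_concurrent` function are hypothetical names, and treating a task that starts exactly when the other ends as “not overlapping” is an assumption made here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Task:
    name: str
    start: float  # time at which the task starts
    end: float    # time at which the task ends

def are_concurrent(a: Task, b: Task) -> bool:
    """True if one task starts between the start and end of the other.

    Assumption: a task starting exactly at the other's end does not overlap.
    """
    return a.start <= b.start < a.end or b.start <= a.start < b.end

if __name__ == "__main__":
    overlapping = are_concurrent(Task("process 1", 0, 3), Task("process 2", 2, 5))
    back_to_back = are_concurrent(Task("process 1", 0, 3), Task("process 2", 3, 5))
    print(overlapping, back_to_back)
```

Note that the check says nothing about how the overlap is achieved, only that it exists.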

Parallelism and Semi-Parallelism

Now we arrive at parallelism and semi-parallelism. Here we are indeed talking about a concrete way to achieve concurrency, through the simultaneous execution of multiple processes.

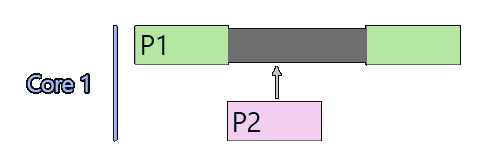

The first thing to remember is that, in general, a single-core processor can only execute one process at a time. That’s how they are built and that’s how they function.

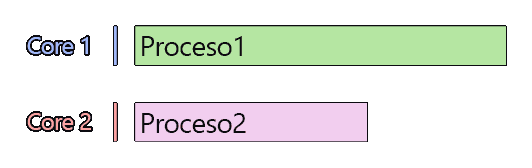

In the case where our processor has multiple cores, we can indeed achieve parallelism. This means that each core can take care of executing one of the processes.

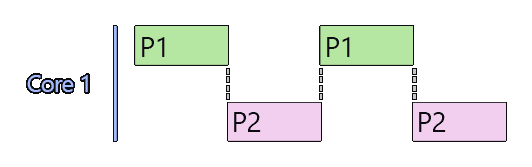

However, even when our processor does not have multiple cores to execute processes in parallel, we can still emulate it with semi-parallelism.

Basically, the processor switches from task to task, dedicating a slice of time to each one (a technique more commonly known as time slicing). The operating system takes care of this time sharing and task management.

In this way, an illusion is created that both are executing simultaneously.
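To make the idea of switching from task to task concrete, here is a toy sketch using Python generators. It is not how an operating system actually schedules processes (real schedulers interrupt tasks on their own, while here each task pauses voluntarily at `yield`), and the names `task` and `round_robin` are hypothetical.

```python
from collections import deque

def task(name, steps):
    """Hypothetical task split into steps; each yield is a point where it pauses."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"

def round_robin(tasks):
    """Run one step of each task in turn until all of them are finished."""
    queue = deque(tasks)
    trace = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))  # give the task one slice of time
            queue.append(current)        # not finished: back of the queue
        except StopIteration:
            pass                         # finished: drop it from the queue
    return trace

if __name__ == "__main__":
    for line in round_robin([task("process 1", 3), task("process 2", 2)]):
        print(line)
```

Only one step runs at any instant, yet the steps of both processes end up interleaved in the trace, which is the illusion described above.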

Logically, parallelism reduces overall processing time. If you have two cores (let’s imagine each one is as powerful as a single-core processor would be), the two processes can run at the same time, so the total time is shorter.

But even in the case of semi-parallelism, with a single core, processing times can be reduced. This is because many processes often have waits.

Suppose process 1 has a wait. Semi-parallelism allows the processor to fit process 2 (or part of it) into that gap. Thus, you gain that time.
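The gain from overlapping waits can be observed with a rough timing sketch. It assumes the “process” does nothing but wait (simulated with `time.sleep`) and uses Python threads as the switching mechanism. The function names are hypothetical, and the exact timings will vary from machine to machine.

```python
import threading
import time

def process_with_wait(wait_seconds):
    """Hypothetical process that spends its time waiting (for example, on I/O)."""
    time.sleep(wait_seconds)  # while this thread waits, another one can run

def timed(run):
    """Return how many seconds the given function takes to run."""
    start = time.perf_counter()
    run()
    return time.perf_counter() - start

def one_after_another():
    process_with_wait(0.2)
    process_with_wait(0.2)

def interleaved():
    threads = [
        threading.Thread(target=process_with_wait, args=(0.2,)) for _ in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until both processes have finished

if __name__ == "__main__":
    print(f"one after another: {timed(one_after_another):.2f}s")
    print(f"interleaved:       {timed(interleaved):.2f}s")
```

Run one after another, the two waits add up. Interleaved, they overlap, so the total should be close to the length of a single wait.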

Asynchrony

Finally, we come to asynchrony. An asynchronous process is a process that is not synchronous (and I feel quite satisfied saying that). As we can see, it is a term that is too broad.

Concurrency is related to asynchrony, as are parallelism and semi-parallelism. Everything is more or less related and everything is asynchrony in the end.

But, in general, we usually call a process “asynchronous” when it involves a long wait or a long blocking operation. For example, waiting for a user to press a key, for a file to be read, or for a communication to be received.

So that these processes do not block the flow of the main program, they are launched with a concurrency mechanism to make them non-blocking. Thus, it is usually said that they have been launched asynchronously.
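As one possible illustration, the sketch below uses Python’s `asyncio` as the concurrency mechanism. The coroutine `wait_for_file` is hypothetical: it simulates a slow read with `asyncio.sleep` instead of touching a real file, and the main flow keeps working while that wait is in progress.

```python
import asyncio

async def wait_for_file(log):
    """Hypothetical long wait, standing in for a file read or a network reply."""
    log.append("read started")
    await asyncio.sleep(0.1)  # the wait; control goes back to the event loop
    log.append("read finished")
    return "file contents"

async def main():
    log = []
    pending = asyncio.create_task(wait_for_file(log))  # launched asynchronously
    log.append("main keeps working")  # runs before the read even starts
    await asyncio.sleep(0)            # yield once so the task can begin
    log.append("main still working")  # the read is now waiting in the background
    result = await pending            # collect the result when it is needed
    return log, result

if __name__ == "__main__":
    events, contents = asyncio.run(main())
    for event in events:
        print(event)
    print(contents)
```

The main flow is never stuck waiting for the read until it explicitly asks for the result with `await pending`.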

Formal Definition

Now that we have seen the different terms, let’s go for a slightly more rigorous definition of what each of them is.

Asynchrony

Execution model in which operations do not block the program flow and can be executed non-sequentially.

Concurrency

The ability of a system to manage multiple tasks that overlap (concur) in time.

Parallelism

Execution of multiple processes on different cores of a processor, allowing true simultaneous processing.

Semi-Parallelism

“Simulated” parallelism within the same core, which is performed by activating and pausing tasks, dedicating processor time to them alternately.

As we have seen, these are terms that are more or less simple, but they are related (and mixed with each other). As I said, more important than the words and the formal definitions is knowing what they are and understanding how they work.